Startup-led innovations are enhancing the detection of manipulated content and enabling more secure digital environments, according to data and analytics company GlobalData.

The finding coincides with OpenAI’s recent introduction of a deepfake detector designed to identify images produced by its image generator, DALL-E. A group of disinformation researchers will gain access to the tool for real-world testing.

GlobalData says transformative technologies such as AI-driven deepfake detection, real-time monitoring, and advanced data analytics are revolutionising digital security and authenticity.

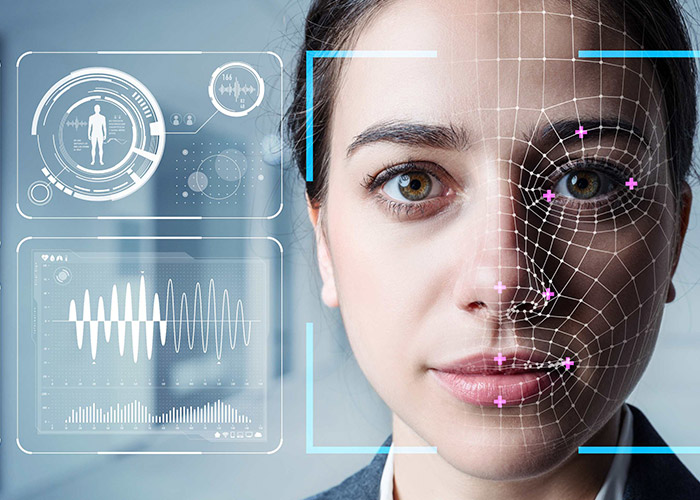

“AI-generated deepfakes have become increasingly sophisticated, posing significant risks to individuals, businesses, and society,” says GlobalData’s Vaibhav Gundre. “However, cutting-edge detection methods powered by machine learning are helping to identify and flag manipulated content with growing accuracy. From analysing biological signals to leveraging powerful algorithms, these tools are fortifying defences against the misuse of deepfakes for misinformation, fraud, or exploitation.”

GlobalData’s Disruptor Intelligence Center Innovation Explorer database highlights several pioneering startups tackling deepfake detection.

Sensity AI uses a proprietary API to detect deepfake media such as images, videos, and synthetic identities. Its detection algorithm is fine-tuned to identify unique artifacts and high-frequency signals that are characteristic of AI-generated images and typically absent from natural images.

DeepMedia.AI’s deepfake detection tool, DeepID, analyses image integrity by looking for pixel-level modifications, image artifacts, and other signs of manipulation. For audio, it examines characteristics such as pitch, tone, and spectral patterns to verify authenticity. For video, it performs frame-by-frame analysis of visual characteristics such as facial expressions, body movements, and other visual elements.

Attestiv updated its online platform in January 2024 to detect AI-generated fakery and authenticate media, offering real-time protection against sophisticated deepfakes in videos, images, and documents. The platform uses advanced machine learning to analyse images at the pixel level and overlays heatmaps on them to show where and how they might have been manipulated.

“These advancements in deepfake detection are transforming cybersecurity toward ensuring digital content authenticity,” added Gundre. “However, as this technology evolves, we must critically examine the ethical considerations around privacy, consent, and the unintended consequences of its widespread adoption. Striking the right balance between protection and ethical use will be paramount in shaping a future where synthetic media can be safely leveraged for legitimate applications.”